From Classification to Clinical Insights: Towards Analyzing and Reasoning About Mobile and Behavioral Health Data With Large Language Models

Abstract

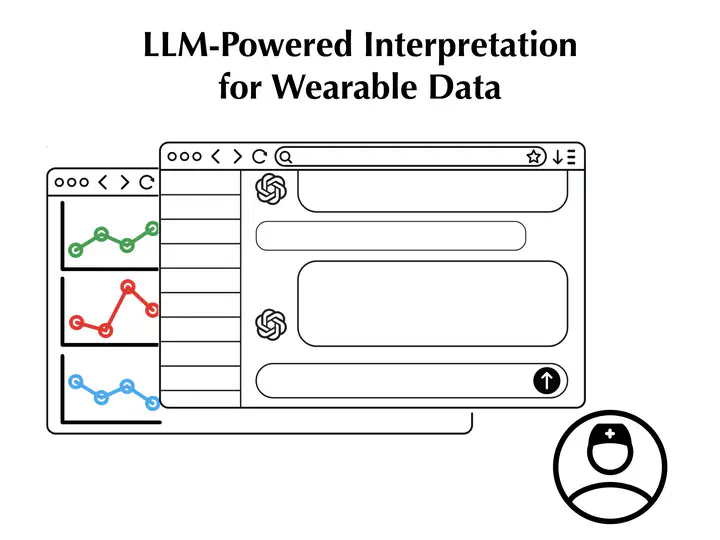

Passively collected behavioral health data from ubiquitous sensors could provide mental health professionals with valuable insights into patients’ daily lives, but such efforts are impeded by disparate metrics, lack of interoperability, and unclear correlations between the measured signals and an individual’s mental health. To address these challenges, we pioneer the exploration of large language models (LLMs) to synthesize clinically relevant insights from multi-sensor data. We develop chain-of-thought prompting methods to generate LLM reasoning on how data pertaining to activity, sleep, and social interaction relate to conditions such as depression and anxiety. We then prompt the LLM to perform binary classification, achieving accuracies of 61.1%, exceeding the state of the art. We find that models like GPT-4 correctly reference numerical data 75% of the time. Although we began our investigation by developing methods for using LLMs to output binary classifications for conditions like depression, we find that their greatest potential value to clinicians lies not in diagnostic classification but in rigorous analysis of diverse self-tracking data to generate natural language summaries that synthesize multiple data streams and identify potential concerns. Clinicians envisioned using these insights in a variety of ways, principally to foster collaborative investigation with patients that strengthens the therapeutic alliance and guides treatment. We describe this collaborative engagement, additional envisioned uses, and associated concerns that must be addressed before adoption in real-world contexts.